The push toward smarter systems is reshaping everything, from how we search for answers to how businesses operate. But behind the impressive breakthroughs in artificial intelligence lies a growing problem that few outside the tech world are talking about: sustainability. More specifically, can UK data centers handle the soaring demands of AI without running themselves (and the planet) into the ground?

AI isn’t just another program you add to your server stack. It chews through data, requires huge models, and relies on layers upon layers of computations. All of that takes power. A lot of it. And now, the pressure is building on UK-based data centers to meet those energy needs without breaking sustainability goals or infrastructure capacity. Let’s break down where the real trouble lies.

You might think of AI as software: lines of code, algorithms, and tools that run in the background. But what's often missed is the hardware story. Every time you run a large AI model, you're turning up the heat, literally. These systems demand specialized chips, usually GPUs or similar accelerators, which run hot and require serious cooling. Add to that the fact that most advanced AI systems train on petabytes of data over days or weeks, and you start to see why power consumption quickly spins out of control.

UK data centers, historically built for cloud storage and business hosting, now face a demand profile they weren’t originally designed for. There’s a shift from general-purpose computing to high-performance AI computing, and it’s moving faster than upgrades can be made.

A single AI model can consume as much electricity as hundreds of homes over its training lifecycle. Multiply that by thousands of models running simultaneously, and suddenly you're in the red zone of the energy supply chart. National Grid’s forecast already hints at the issue: electricity demand from data centers could more than double by 2030.

There’s also the cooling issue, which is becoming harder to brush aside. UK climate conditions aren’t extreme, but even here, cooling is one of the most resource-intensive tasks in a data center. Traditional air conditioning, once sufficient, is struggling to keep up with the temperature spikes caused by AI-heavy workloads.

Some facilities are experimenting with liquid cooling systems and submersion tanks, where servers sit in temperature-stable liquids to disperse heat more efficiently. It sounds futuristic—and in some ways, it is—but these setups aren’t widespread yet. They require custom infrastructure and higher up-front investments, and most centers are still weighing how to justify the switch.

On top of that, water usage is turning into a flashpoint. Certain cooling systems rely heavily on evaporative methods, which are fine when used sparingly, but scale that up across dozens of locations and it's no longer a footnote. Several UK data centers are already being monitored for local water stress, especially in areas where climate change has brought irregular rainfall patterns.

The UK’s power grid wasn’t built with AI in mind. It was designed for gradual growth, distributed use, and manageable peaks. What’s now happening is more like a series of mini-power plants suddenly popping up around London, Manchester, and Slough—all demanding round-the-clock electricity.

There have been delays in connecting new data centers to the grid, particularly in and around London. In some zones, there's already a cap on how much new infrastructure can draw. AI demand is pushing up against those limits faster than anticipated.

Some operators are turning to on-site power generation to offset grid strain. This includes solar panels, battery storage, and, in a few cases, backup generators. But here's the catch: while solar and battery storage help reduce an operator's carbon footprint, generators typically run on fossil fuels. So you end up with a trade-off: either you wait for grid approval, or you keep your systems running on generators at the cost of higher emissions.

It’s a lose-lose in some areas, and that’s prompting concern from regulators and sustainability bodies. The UK has net-zero targets, and energy-hungry AI centers don’t exactly align with that vision—unless the entire setup changes.

Faced with mounting pressure, some of the major players have started adjusting their strategies. A few examples:

Shift to renewable energy contracts: Some data centers now claim to be powered entirely by renewable sources. This doesn’t mean the electrons hitting the servers are green—it means they've purchased enough renewable energy credits to match their usage. It’s a good first step, but it’s not a perfect solution.

Load balancing with AI itself: Ironically, the same AI tools causing the issue are now being used to optimize energy usage. These systems can detect power draw patterns, shift tasks to off-peak hours, and tweak hardware configurations on the fly. Still, this is a drop in the ocean compared to the overall energy requirements.

Pledges and PR: The sustainability pledges are everywhere. “Carbon neutral by 2030,” “water positive by 2028”—you’ll find them in nearly every company report. But how those goals will be achieved remains fuzzy. The action plans are either light on detail or hinge on breakthroughs that aren’t commercially available yet.
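To make the load-balancing idea above more concrete, here is a minimal sketch of shifting deferrable work to off-peak hours. It is an illustration only, not how any particular operator does it: the `Job` class, the `schedule` function, the hourly baseline figures, and the job sizes are all hypothetical, and the greedy rule (put each deferrable job in the quietest window) is a simplification chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    hours: int         # run time in whole hours
    power_kw: float    # assumed average draw while running
    deferrable: bool   # False means the job must start at hour 0


def schedule(jobs, baseline_kw):
    """Greedy sketch: fixed jobs start at hour 0; each deferrable job goes
    into the window whose busiest hour is currently the least loaded."""
    load = list(baseline_kw)
    plan = {}
    # Place fixed jobs first so deferrable ones can route around them.
    for job in sorted(jobs, key=lambda j: j.deferrable):
        last_start = len(load) - job.hours
        starts = range(last_start + 1) if job.deferrable else [0]
        best = min(starts, key=lambda s: max(load[s:s + job.hours]))
        for h in range(best, best + job.hours):
            load[h] += job.power_kw
        plan[job.name] = best
    return plan, load


# Hypothetical 24-hour baseline: quiet overnight, busy during the day.
baseline = [200] * 6 + [500] * 16 + [300] * 2

jobs = [
    Job("train", hours=4, power_kw=150, deferrable=True),
    Job("inference", hours=2, power_kw=50, deferrable=False),
]

plan, load = schedule(jobs, baseline)
print(plan)       # start hour chosen for each job
print(max(load))  # peak draw after scheduling
```

In this made-up example the training run lands in the overnight trough, so the daytime peak does not rise. That also shows the limit noted above: shifting moves demand around, but the total energy consumed stays the same.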

One missing piece is collaboration. There’s very little unified effort among UK data center operators to tackle this problem together. Unlike the telecom sector, which built standards cooperatively, data centers are mostly going it alone. This slows down innovation and limits large-scale change.

Artificial intelligence might be the golden goose of today’s tech economy, but keeping it alive takes more than silicon and code. For the UK’s data centers, it’s becoming a serious sustainability test—one that demands more than pledges and patchwork fixes. Power demands are climbing. Cooling systems are stretched thin. The grid is creaking under new pressure. And while a few operators are taking smart steps, the pace of action isn’t keeping up with the pace of change.

AI may be leading us toward smarter machines, but the question now is whether we’re managing the infrastructure smartly enough to sustain it. That’s a conversation the UK can’t afford to delay much longer.

Advertisement

Can a robotic puppy really help ease dementia symptoms? Investors think so—$6.1M says it’s more than a gimmick. Here’s how this soft, silent companion is quietly transforming eldercare

Why is Alibaba focusing on generative AI over quantum computing? From real-world applications to faster returns, here are eight reasons shaping their strategy today

Learn about landmark legal cases shaping AI copyright laws around training data and generated content.

What happens when robots can feel with their fingertips? Explore how tactile sensors are giving machines a sense of touch—and why it’s changing everything from factories to healthcare

If you want to assure long-term stability and need a cost-effective solution, then think of building your own GenAI applications

How Orca LLM challenges the traditional scale-based AI model approach by using explanation tuning to improve reasoning, accuracy, and transparency in responses

How using Hugging Face + PyCharm together simplifies model training, dataset handling, and debugging in machine learning projects with transformers

Learn different ways of executing shell commands with Python using tools like os, subprocess, and pexpect. Get practical examples and understand where each method fits best

How the EV charging industry is leveraging AI to optimize smart meter data, predict demand, enhance efficiency, and support a smarter, more sustainable energy grid

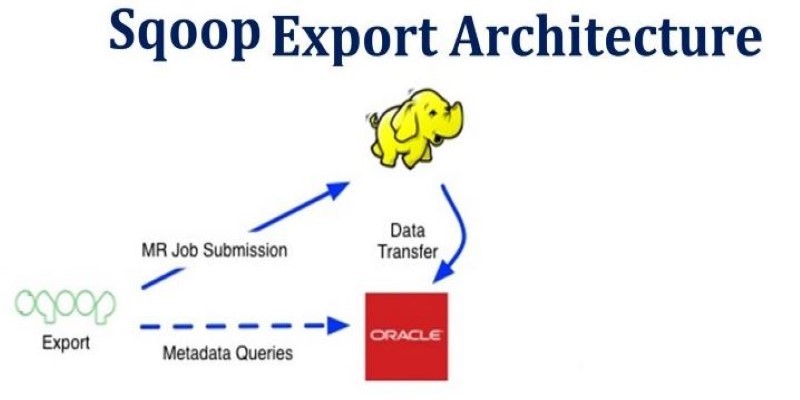

How Apache Sqoop simplifies large-scale data transfer between relational databases and Hadoop. This comprehensive guide explains its features, workflow, use cases, and limitations

Google's Willow quantum chip boosts performance and stability, marking a big step in computing and shaping future innovations

Understand how GPT's decoder-only transformer works, its advantages, challenges, and why it is transforming the future of AI