A robot that can feel is no longer a fantasy. Scientists have worked for decades to mimic human touch in machines, but progress was slow and clumsy until recently. In a new wave of development, engineers have built robots equipped with tactile sensors right at the fingertips. These sensors can detect subtle pressure, texture, and resistance, giving the robot something very close to a sense of touch. Unlike cameras or microphones, which observe from a distance, touch happens up close. And for a robot, touch means control: not just gripping, but adjusting in real time, as humans do without thinking.

At the heart of this progress is sensor design. Tactile sensors are built to detect physical interaction. They're often made of flexible materials layered with electronics that respond to pressure. When installed at the robot's fingertips, these sensors send feedback to an internal processor, much like how your skin sends signals to your brain. The robot doesn’t feel emotions, of course—but it registers contact and reacts.

A simple version of this might allow a robot to tell whether it's holding a soft sponge or a hard piece of metal. A more advanced version, however, allows it to respond to differences in surface texture, object shape, or changes in grip tension mid-task. This is a breakthrough in tasks that require precision, such as assembling tiny components or handling delicate materials. Tactile feedback enables the machine to slow down, speed up, or adjust pressure according to the material it's touching.

Different kinds of tactile sensors exist. Some are resistive, meaning they detect changes in electrical resistance when pressure is applied. Others use capacitive sensing, similar to how smartphone screens respond to fingers. There are also optical, piezoelectric, and magnetic sensors, depending on the type of touch sensitivity required by the application. In some high-end robots, engineers combine different types to achieve multi-layered sensing, encompassing pressure, vibration, and position detection all in one fingertip.
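To make the resistive case concrete, here is a minimal Python sketch of how a raw reading might be turned into a resistance value. It assumes a setup the article does not describe: the sensor is wired in series with a fixed resistor as a voltage divider, and an analog-to-digital converter (ADC) samples the voltage across the fixed resistor. The function name, the 10-bit ADC range, the 3.3 V supply, and the 10 kΩ resistor are all illustrative assumptions.

```python
def sensor_resistance(adc_count, adc_max=1023, v_supply=3.3, r_fixed=10_000.0):
    """Estimate a resistive sensor's resistance (ohms) from one ADC sample.

    Assumed wiring (hypothetical): supply -> sensor -> ADC node -> fixed
    resistor -> ground, so v_out = v_supply * r_fixed / (r_sensor + r_fixed).
    """
    if not 0 <= adc_count <= adc_max:
        raise ValueError("adc_count is outside the ADC range")
    v_out = v_supply * adc_count / adc_max
    if v_out == 0:
        # No current flows through the divider: treat the sensor as open.
        return float("inf")
    # Solve the divider equation for the sensor's resistance.
    return r_fixed * (v_supply - v_out) / v_out


if __name__ == "__main__":
    for count in (0, 256, 512, 1023):
        print(count, sensor_resistance(count))
```

In many force-sensitive resistors the resistance falls as pressure rises, so a controller would typically map a lower value to a firmer touch. The exact curve depends on the part and has to be calibrated.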

The leap here isn't just adding more sensors—it's putting them exactly where the touch matters. On the fingertips, close to the action. This dramatically improves hand-eye coordination in robotic arms, which until now relied mostly on visual data or pre-programmed movement.

The addition of tactile sensors on robot fingertips solves a long-standing problem: lack of precision in real-world tasks. Robots perform well in controlled environments, but unpredictable conditions, such as varied shapes, soft materials, and human interaction, often defeat them. Giving robots a sense of touch helps them adapt in real time without being pre-programmed for every scenario.

In warehouses and factories, this matters. A robot with fingertip sensors can pick up irregular items without crushing or dropping them. It can pack boxes quickly yet gently. In surgery, a robotic assistant could distinguish tissue types by touch, helping doctors perform delicate procedures. For elderly care or home use, a robot that can safely pass a cup or help someone dress becomes far more practical.

Touch-sensitive robots also tackle a major bottleneck in automation: handling flexible or fragile items reliably. Current machines often drop soft objects or press too hard. With tactile sensors, robots adjust mid-motion to prevent these failures. It adds a layer of physical judgment that was missing.
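The mid-motion adjustment described here can be sketched as a small feedback step. This is an illustrative simplification, not how any particular robot works: it assumes a slip shows up as a sharp drop in fingertip pressure between two samples, and it nudges the commanded grip force up or down by a fixed step. All names, thresholds, and units below are hypothetical.

```python
def detect_slip(previous_pressure, current_pressure, drop_ratio=0.2):
    """Flag a possible slip when pressure falls sharply between two samples."""
    if previous_pressure <= 0:
        return False  # nothing was being held, so there is nothing to slip
    drop = (previous_pressure - current_pressure) / previous_pressure
    return drop > drop_ratio


def adjust_grip(force, pressure, slipping, max_pressure, max_force, step=0.1):
    """Return the next grip force command from one tactile reading."""
    if slipping:
        force += step  # object is sliding: squeeze a little harder
    elif pressure > max_pressure:
        force -= step  # pressing too hard: back off to protect the object
    # Keep the command inside the actuator's assumed safe range.
    return min(max(force, 0.0), max_force)


if __name__ == "__main__":
    force = 1.0
    readings = [1.0, 0.7, 0.9, 2.5]  # made-up pressure samples
    for prev, curr in zip(readings, readings[1:]):
        force = adjust_grip(force, curr, detect_slip(prev, curr),
                            max_pressure=2.0, max_force=5.0)
        print(round(force, 2))
```

Real controllers usually run this kind of loop many times per second and often rely on vibration or shear signals rather than pressure alone to detect slip.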

The idea of robot tactile sensors isn’t new, but their placement and sensitivity have improved. Older systems had sensors scattered across the hand, too far from the point of contact for immediate feedback. Now, sensors directly on fingertips make the interaction more natural and effective, unlocking tasks that once required human finesse.

Even with breakthroughs, giving a robot the sense of touch remains harder than it seems. Human skin is packed with nerves—pressure, temperature, pain, and position sensors all layered in a small space. Replicating that in machines is a long-term challenge. Most robotic fingertip sensors today only measure pressure. That allows a robot to adjust its grip or detect slippage, but it is still far from human ability.

Durability is another problem. Tactile sensors often utilize soft or flexible materials that wear out more quickly in industrial settings. Keeping them reliable under constant use or harsh conditions takes smart design and maintenance. Power use is also a concern—running many sensors and processing real-time data strains energy efficiency, especially in battery-powered robots.

Interpretation is just as hard. Touch data is messy and constant. For a robot to understand what it’s touching, it needs fast, accurate data processing. Machine learning helps here. Algorithms trained to match tactile input with object properties let the robot learn from experience. Over time, robots can handle unfamiliar objects and adjust their grip based on past interactions.
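As a toy illustration of matching tactile input to object properties, the sketch below uses a nearest-centroid classifier, one of the simplest learning methods, chosen here for brevity rather than because the article names it. It assumes each touch has already been reduced to a short feature vector (for example, stiffness and roughness scaled to the range 0 to 1). The feature choice, labels, and numbers are invented for the example.

```python
from math import dist


def train_centroids(samples):
    """Average the feature vectors for each label.

    samples: iterable of (feature_vector, label) pairs.
    Returns a dict mapping each label to its mean feature vector.
    """
    sums, counts = {}, {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total]
            for label, total in sums.items()}


def classify(centroids, features):
    """Return the label whose centroid is closest to the new reading."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    # Hypothetical (stiffness, roughness) readings from earlier grasps.
    history = [
        ((0.2, 0.1), "sponge"), ((0.3, 0.2), "sponge"),
        ((0.9, 0.8), "metal"), ((1.0, 0.9), "metal"),
    ]
    model = train_centroids(history)
    print(classify(model, (0.85, 0.9)))
```

A production system would likely use richer features and a more capable model, but the loop is the same: collect labeled touches, fit a model, then use it to interpret new contact.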

Even the best touch-sensitive robots remain limited. They can’t sense temperature changes or emotional signals through touch. But they’re becoming better at what matters: physical awareness. And that changes how they function in human spaces.

As tactile sensor technology improves, the range of use cases is growing. One fast-growing area is prosthetics. Robotic hands with fingertip sensors give users pressure feedback, helping those with prosthetic limbs grasp objects with more control. It doesn’t fully restore touch, but gives back something once impossible—judgment by feel.

In agriculture, robots with fingertip sensors could harvest fruit or check ripeness without damaging crops. In restaurants or food factories, they could handle baked goods or soft produce with less waste. In space, touch-sensitive robots might repair spacecraft where human hands can’t reach.

The market is responding. Startups and labs are racing to develop sensors that are thinner, faster, and cheaper. Integration with AI is getting tighter. Robots no longer just follow—they respond. And for that, touch is key. The focus on touch-sensitive robots shows a shift from automation based only on sight and structure to one that includes physical intuition.

Robots don’t need emotions to be more useful. They just need to feel contact. Tactile feedback gives them that edge, making them less like stiff machines and more like adaptable tools that work with us, not just around us.

Robots that see and hear are common, but teaching them to touch is a major step forward. With fingertip tactile sensors, machines gain control, flexibility, and precision. They start to act less like rigid tools and more like collaborators. From factories to hospitals, touch opens new possibilities for robots to handle delicate, complex tasks once thought impossible, reshaping their role in work and care, right at their fingertips.

Advertisement

How the EV charging industry is leveraging AI to optimize smart meter data, predict demand, enhance efficiency, and support a smarter, more sustainable energy grid

Learn everything about Stable Diffusion, a leading AI model for text-to-image generation. Understand how it works, what it can do, and how people are using it today

How the semi-humanoid AI service robot is reshaping commercial businesses by improving efficiency, enhancing customer experience, and supporting staff with seamless commercial automation

How the ORDER BY clause in SQL helps organize query results by sorting data using columns, expressions, and aliases. Improve your SQL sorting techniques with this practical guide

Learn about landmark legal cases shaping AI copyright laws around training data and generated content.

How agentic AI is driving sophisticated cyberattacks and how the UK AI Opportunities Action Plan is shaping industry reactions to these risks and opportunities

Sisense adds an embeddable chatbot, enhancing generative AI with smarter, more secure, and accessible analytics for all teams

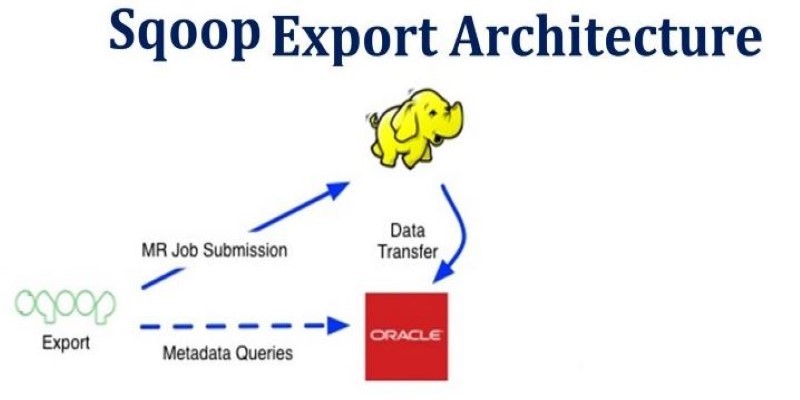

How Apache Sqoop simplifies large-scale data transfer between relational databases and Hadoop. This comprehensive guide explains its features, workflow, use cases, and limitations

What happens when blockchain meets robotics? A surprising move from a blockchain firm is turning heads in the AI industry. Here's what it means

How using Hugging Face + PyCharm together simplifies model training, dataset handling, and debugging in machine learning projects with transformers

What happens when an automaker lets driverless cars loose on public roads? Nissan is testing that out in Japan with its latest AI-powered autonomous driving system

How the Vertex AI Model Garden supports thousands of open-source models, enabling teams to deploy, fine-tune, and scale open LLMs for real-world use with reliable infrastructure and easy integration