Advertisement

Video understanding was locked behind massive models and specialized hardware for a long time. Devices without high-end GPUs were simply left out of the loop. That gap has started to close with SmolVLM2, a small but surprisingly capable vision-language model designed for video analysis on almost any device.

SmolVLM2 isn’t just a lighter version of what already exists—it reimagines how models process multimodal inputs, trims the fat where it counts, and still delivers strong performance. The goal is simple: make video understanding widely accessible without sacrificing usefulness, even for users with limited computing power.

SmolVLM2 stands out for its ability to run on-device while maintaining decent accuracy. It was trained to process video frames, visual cues, and text-based prompts like larger models usually do. But instead of needing data centers to operate, it was optimized to run directly on smartphones, laptops, edge devices, and embedded systems. This changes things significantly—particularly for real-time tasks like captioning, event detection, or understanding video instructions in low-resource environments.

Unlike earlier lightweight models that struggled with video, SmolVLM2 uses frame-efficient sampling and compact temporal encoding. It doesn't try to look at everything. Instead, it learns to focus on keyframes that matter most in a sequence. This drastically reduces the need for processing without making the model blind to context. The result is a system that can generate a meaningful summary, answer questions about video scenes, or match moments to descriptions—all within the power envelope of regular consumer hardware.

SmolVLM2 was built on top of a dual-stream architecture. One stream handles vision—frames pulled from videos in a structured way—while the other works with text. These streams don't operate in isolation; they're fused through a series of learned attention modules, keeping the context tightly linked. Each modality informs the other, helping the model stay grounded in what's being seen and asked. This makes SmolVLM2 effective for use cases like video QA, moment retrieval, and generating text captions from clips.

Training a model like SmolVLM2 requires a careful balance between scope and efficiency. It uses a distilled training method, where knowledge from a larger, powerful teacher model is compressed into a smaller version. The distillation process is guided by performance on video-language datasets like MSR-VTT, ActivityNet Captions, etc. These datasets helped the team create a model that generalizes well across tasks without requiring millions of parameters.

The model's tokenizer and visual encoder are both designed for speed. Frames are compressed, encoded into embeddings, and processed through fewer layers than a standard transformer stack. Text is handled through a lean tokenizer built to preserve essential semantics with fewer tokens. Because of these optimizations, SmolVLM2 consumes far less memory and computing power than traditional large-scale video-language models while offering decent performance on benchmarks.

One of the biggest technical improvements is the temporal encoder. Many models struggle to capture changes across time meaningfully. SmolVLM2 applies a clever technique that identifies temporal landmarks—frames where something significant changes—and pays more attention to them. This makes the model far more efficient than brute-force frame-by-frame analysis.

SmolVLM2 is already proving useful in areas that haven’t historically had access to real-time video intelligence. For mobile developers, this opens up a range of applications: AR systems that can respond to the visual environment, assistive tools for visually impaired users, educational apps that interpret instructional videos on the fly, and even smart surveillance that runs directly on low-cost cameras.

Another strong example is in robotics. Many small-scale robots or drones lack the power to run large models but still need to process video feeds in real time. SmolVLM2 allows these devices to extract semantic meaning from the world around them without offloading data to the cloud, reducing both latency and dependency on network connections.

Developers on localization and translation have also started testing SmolVLM2 for scene narration and instruction in different languages. Since the model is designed to work well across various visual and language contexts, it adapts easily to multilingual tasks. Combined with lightweight speech-to-text modules, it can provide full multimodal input-output flows, all on-device.

SmolVLM2 also becomes a strong candidate for edge AI deployments in rural or bandwidth-constrained settings by lowering the entry barrier. Education platforms in underserved regions, healthcare applications running on portable machines, or public infrastructure tools that monitor without external dependencies benefit from something small, smart, and flexible.

SmolVLM2 isn’t a one-off model—it’s part of a broader shift toward efficient AI that doesn’t depend on constant cloud access. With rising demand for private, local inference and tighter data regulations, on-device intelligence is becoming less of a bonus and more of a necessity.

Its open architecture and transparent training make it easier for researchers to fine-tune specific domains like sports, surveillance, or healthcare. This flexibility means SmolVLM2 can easily evolve beyond its original dataset and adapt to new contexts.

Smaller models use less energy, train faster, and better align with sustainable tech goals. SmolVLM2 proves that building smarter systems doesn’t always mean building bigger ones.

Enabling high-quality video understanding within strict hardware limits challenges the assumption that multimodal AI must be resource-heavy. It makes intelligent processing possible on phones, drones, or even simple sensors—bringing AI closer to where it’s needed.

SmolVLM2 brings advanced video understanding to devices that couldn't previously handle it. With compact architecture and efficient training, it delivers real-time video-language capabilities without relying on large-scale infrastructure. It proves that smaller models can still perform meaningful tasks, from assistive tools to embedded systems. As the demand for accessible, device-friendly AI grows, SmolVLM2 sets a practical example of what's possible. It's designed not for more data but for smarter use, making video analysis achievable anywhere, whether on your phone, a drone, or a compact edge device.

Advertisement

Know how to reduce algorithmic bias in AI systems through ethical design, fair data, transparency, accountability, and more

How using Hugging Face + PyCharm together simplifies model training, dataset handling, and debugging in machine learning projects with transformers

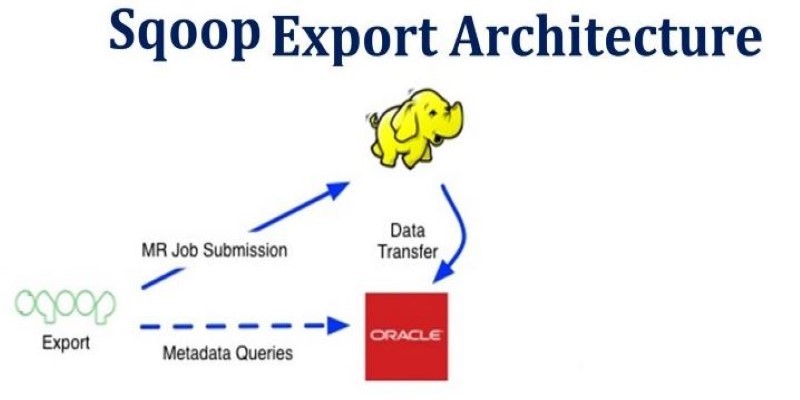

How Apache Sqoop simplifies large-scale data transfer between relational databases and Hadoop. This comprehensive guide explains its features, workflow, use cases, and limitations

What happens when an automaker lets driverless cars loose on public roads? Nissan is testing that out in Japan with its latest AI-powered autonomous driving system

How Orca LLM challenges the traditional scale-based AI model approach by using explanation tuning to improve reasoning, accuracy, and transparency in responses

Is the UK ready for AI’s energy demands? With rising power use, outdated cooling, and grid strain, the pressure on data centers is mounting—and sustainability may be the first casualty

Looking for a reliable and efficient writing assistant? Junia AI: One of the Best AI Writing Tool helps you create long-form content with clear structure and natural flow. Ideal for writers, bloggers, and content creators

Explore the role of a Director of Machine Learning in the financial sector. Learn how machine learning is transforming risk, compliance, and decision-making in finance

If you want to assure long-term stability and need a cost-effective solution, then think of building your own GenAI applications

Microsoft’s in-house Maia 100 and Cobalt CPU mark a strategic shift in AI and cloud infrastructure. Learn how these custom chips power Azure services with better performance and control

How MobileNetV2, a lightweight convolutional neural network, is re-shaping mobile AI. Learn its features, architecture, and applications in edge com-puting and mobile vision tasks

SmolVLM2 brings efficient video understanding to every device by combining lightweight architecture with strong multimodal capabilities. Discover how this compact model runs real-time video tasks on mobile and edge systems