Artificial intelligence continues to shape everyday experiences, and two major announcements underscore its deep integration into sports, business, and technology. IBM revealed its expanded AI features for the 2025 Masters Tournament, promising a more immersive and intelligent way for fans to engage with one of golf's most prestigious events.

Meanwhile, Meta has introduced its latest Llama 4 AI models, designed to further advance open-source language technology. These developments show how AI isn't just staying in labs or boardrooms—it’s finding meaningful uses that impact millions of people in real, practical ways.

For over two decades, IBM has partnered with the Masters Tournament to bring digital innovation to Augusta National Golf Club. For 2025, IBM is adding new AI-driven tools aimed at giving fans smarter, faster, and more personal access to the tournament’s stories and stats. This year’s enhancements build on IBM’s cloud and machine learning work from previous years but with sharper precision and more user-focused results.

One standout addition is a smarter commentary system. Using natural language processing and computer vision, IBM’s AI can now analyze live video, detect key moments in real time, and generate context-rich highlights almost instantly. Instead of generic replays, viewers can access detailed clips with insights about a player’s technique, historical comparisons, and even predictive analysis of how their performance may play out through the round. Fans watching from home or on mobile devices will get streams that feel more personal and informative, rather than a purely passive viewing experience.

The new AI features also include improved player tracking on the Masters digital platforms. Fans can follow their favorite players not just by hole and score, but also by advanced metrics, such as shot placement patterns and estimated risk-reward strategies. IBM's system synthesizes vast amounts of tournament data to tell a clearer story about the game's flow. It doesn't stop at stats either — it offers narrative suggestions based on patterns, such as which underdog is quietly making a move or who's most likely to have a breakout round.

Perhaps most intriguing is IBM's focus on accessibility. Its updated Masters app will include voice-based queries and AI-assisted navigation designed for fans who are visually impaired or simply prefer spoken responses. By making its AI inclusive, IBM is signaling that these tools are not only about sophistication but also about reaching wider audiences in ways that feel natural and intuitive.

While IBM is channeling AI into one event, Meta is aiming to redefine how developers and researchers use language models with its Llama 4 release. The Llama series—short for Large Language Model Meta AI—has been Meta's response to proprietary offerings from competitors like OpenAI and Google, with a focus on open-source development.

Llama 4 represents a major upgrade in capability and efficiency. Available in several model sizes to suit different use cases, Llama 4 is designed to handle a wider variety of languages, understand more nuanced context, and produce more consistent output. It can write, summarize, translate, and even generate code more accurately than its predecessors.

Meta has worked to improve Llama 4's accessibility to developers while keeping performance high. This version introduces enhanced fine-tuning options, enabling organizations to tailor the models to their specific needs without requiring massive infrastructure or teams of engineers. Developers can now fine-tune Llama 4 on their own data, producing outputs that are more relevant and trustworthy for their domain.

Another notable change is in safety and reliability. Meta has invested heavily in reducing harmful biases and hallucinations in the responses generated by Llama 4. This makes it more suitable for educational, research, and creative projects where factual consistency and ethical outputs matter. The company’s open approach invites feedback and contributions from the global AI research community, helping to improve the models collectively rather than keeping them behind closed doors.

For businesses and educators, Llama 4 offers a practical alternative to commercial AI models. It can power chatbots, generate learning materials, and assist in content creation with a lower barrier to entry. Smaller startups and research labs in particular stand to benefit from the balance Llama 4 strikes between quality and openness.

Both IBM’s and Meta’s announcements illustrate how AI is moving beyond abstract potential and into tangible, useful products. At the Masters, IBM is showing how AI can enhance tradition rather than replace it. Fans get more from the experience — more information, more context, more connection — without changing the essence of the tournament itself. This reflects a growing trend where AI is used to support and enrich existing human-centered experiences, rather than overwhelm them.

Meta’s Llama 4 launch, on the other hand, speaks to the push toward democratizing advanced technology. By keeping its models open and adaptable, Meta is giving smaller players a chance to experiment and innovate without relying on expensive proprietary systems. This fuels creativity and helps more people gain hands-on experience with artificial intelligence.

Another shared thread is the emphasis on accessibility. IBM is explicitly designing its AI features at the Masters to include people with different needs and preferences, while Meta is releasing tools that can be fine-tuned by anyone. Both companies are acknowledging that AI must serve diverse users, not just elite specialists or technologists.

These projects also underline the importance of trust in AI. IBM’s enhancements are presented as aids to understanding rather than black-box decisions. Meta is investing in transparency by making its models open and improving safety measures. Trust and clarity are becoming central expectations among users, and these two efforts are responding to that demand.

IBM’s AI upgrades for the 2025 Masters and Meta’s Llama 4 models highlight how deeply artificial intelligence is embedded in daily life. IBM enhances the fan experience while preserving golf traditions, and Meta offers open, adaptable tools for global developers. Both prioritize inclusion, transparency, and practical use. AI is no longer abstract: it shapes how we watch, learn, and create, and it raises the question of how to apply it thoughtfully and broadly in the future.

Advertisement

Beginner's guide to extracting map boundaries with GeoPandas. Learn data loading, visualization, and error fixes step by step

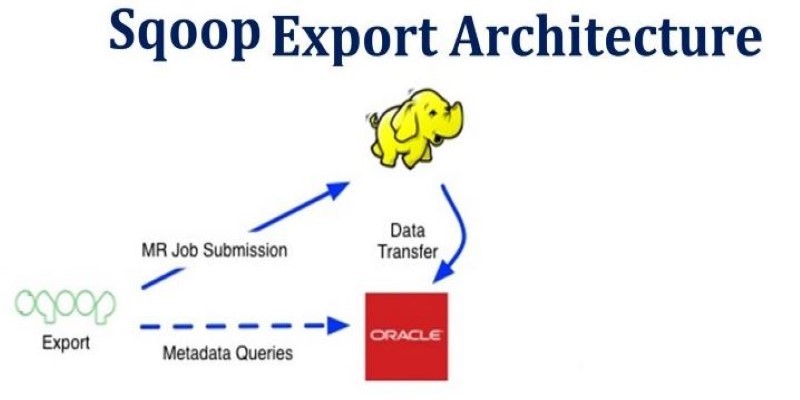

How Apache Sqoop simplifies large-scale data transfer between relational databases and Hadoop. This comprehensive guide explains its features, workflow, use cases, and limitations

How to Integrate AI in a Physical Environment through a clear, step-by-step process. This guide explains how to connect data, sensors, and software to create intelligent spaces that adapt, learn, and improve over time

Explore the top 11 generative AI startups making waves in 2025. From language models and code assistants to 3D tools and brand-safe content, these companies are changing how we create

How MobileNetV2, a lightweight convolutional neural network, is re-shaping mobile AI. Learn its features, architecture, and applications in edge com-puting and mobile vision tasks

Which data science companies are actually making a difference in 2025? These nine firms are reshaping how businesses use data—making it faster, smarter, and more useful

How the ORDER BY clause in SQL helps organize query results by sorting data using columns, expressions, and aliases. Improve your SQL sorting techniques with this practical guide

How AI is shaping the 2025 Masters Tournament with IBM’s enhanced features and how Meta’s Llama 4 models are redefining open-source innovation

Looking for a reliable and efficient writing assistant? Junia AI: One of the Best AI Writing Tool helps you create long-form content with clear structure and natural flow. Ideal for writers, bloggers, and content creators

Google's Willow quantum chip boosts performance and stability, marking a big step in computing and shaping future innovations

Learn about landmark legal cases shaping AI copyright laws around training data and generated content.

How the EV charging industry is leveraging AI to optimize smart meter data, predict demand, enhance efficiency, and support a smarter, more sustainable energy grid