Artificial Intelligence has moved beyond computer screens into the spaces we live and work in. From smart factories to connected homes, AI now interacts directly with the physical world. Integrating AI in a physical environment means linking software intelligence with sensors, data, and human activity. It’s not just about automation—it’s about building systems that learn and respond in real time. Doing this successfully requires a practical plan that connects purpose, data, and design into one continuous process of learning and refinement.

Before integration begins, the first step is to study where AI will operate and why it’s needed. Every environment—whether it’s a warehouse, hospital, or home—has unique rhythms, constraints, and safety considerations. Observing how people and systems interact helps define where AI can add value instead of complexity. The goal is to identify specific problems that intelligent automation can solve.

Sensors, cameras, and IoT devices form the link between the physical space and the AI. These devices collect real-world information, such as motion, light, or temperature, and translate it into digital data that AI systems can analyze. For example, occupancy sensors in a smart office can detect usage patterns, and the AI adjusts lighting and climate accordingly. In industrial settings, robots rely on distance sensors and vision systems to move safely among humans and objects.
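The smart-office example can be sketched in a few lines of Python. This is only an illustration: the `Reading` fields, the target values, and the fixed rule inside `decide_settings` are assumptions, and in a real deployment a learned model would likely replace the rule.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One snapshot from a room's sensors (field names are illustrative)."""
    occupied: bool
    ambient_lux: float
    temperature_c: float


def decide_settings(reading: Reading, target_lux: float = 400.0,
                    occupied_temp_c: float = 22.0,
                    idle_temp_c: float = 18.0) -> dict:
    """Map a sensor reading to lighting and climate setpoints."""
    if not reading.occupied:
        # Empty room: lights off and a relaxed temperature setpoint.
        return {"light_level": 0.0, "setpoint_c": idle_temp_c}
    # Occupied: top up daylight with artificial light, clamped to 0..1.
    shortfall = max(0.0, target_lux - reading.ambient_lux)
    light_level = min(1.0, shortfall / target_lux)
    return {"light_level": round(light_level, 2), "setpoint_c": occupied_temp_c}


print(decide_settings(Reading(occupied=True, ambient_lux=100.0, temperature_c=20.0)))
```

The point of the sketch is the shape of the loop: a physical reading comes in, a decision goes out, and the decision logic can be swapped for something smarter without changing the sensors.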

Defining the main purpose of AI integration early helps shape its behavior. Whether the goal is energy efficiency, safety, or workflow improvement, clear intent ensures the AI system is trained for practical results rather than abstract analysis. A thoughtful start saves time and reduces the risk of overcomplicating the project later.

Once goals are set, the next stage focuses on data—the foundation of any intelligent system. AI depends on reliable, structured information to understand its environment. Creating a data framework involves identifying what to collect, how to collect it, and how to process it efficiently.

A well-designed data map helps clarify this process. It lists every information source, such as sensors, cameras, or user inputs, and shows how they connect to the AI model. The closer this digital map reflects the real environment, the better the system performs. A logistics robot, for instance, needs data about space layout, inventory positions, and worker activity to plan routes accurately.
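A data map does not need special tooling to get started. The sketch below is one possible way to write it down in Python; the `DataSource` class, its fields, and the input names for the logistics robot are all hypothetical and chosen only to mirror the example above.

```python
from dataclasses import dataclass, field


@dataclass
class DataSource:
    """One entry in the data map (structure is an assumption)."""
    name: str
    kind: str                                        # e.g. "lidar", "camera", "user_input"
    location: str
    feeds: list[str] = field(default_factory=list)   # model inputs this source supplies


def unfed_inputs(sources: list[DataSource], required: set[str]) -> set[str]:
    """Return the model inputs that no listed source provides."""
    provided = {inp for source in sources for inp in source.feeds}
    return required - provided


sources = [
    DataSource("floor-scanner", "lidar", "aisle 3", ["space_layout"]),
    DataSource("shelf-tags", "rfid", "all shelves", ["inventory_positions"]),
]
required = {"space_layout", "inventory_positions", "worker_activity"}
print(unfed_inputs(sources, required))
```

Here the check reports that nothing supplies `worker_activity`, which is exactly the kind of gap a data map is meant to expose before training begins.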

Data quality is critical. Real-world data is often inconsistent—sensors may produce gaps or noise. Cleaning and organizing it ensures the AI learns from accurate signals. Machine learning models can then be trained on this refined data to recognize patterns and predict outcomes.
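As a rough illustration of cleaning, the following Python sketch fills gaps with the last valid reading and then smooths spikes with a sliding median. Both techniques are common choices rather than the only ones, and the sample temperature values are made up.

```python
from statistics import median


def fill_gaps(values):
    """Replace None with the last valid value; leading gaps take the first valid value."""
    valid = [v for v in values if v is not None]
    if not valid:
        raise ValueError("no valid readings to fill from")
    filled, last = [], valid[0]
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def median_filter(values, window=3):
    """Smooth spikes with a sliding median; edges use a truncated window."""
    half = window // 2
    return [median(values[max(0, i - half): i + half + 1])
            for i in range(len(values))]


raw = [20.0, None, 20.5, 95.0, 21.0, None, 21.5]   # 95.0 is a sensor glitch
clean = median_filter(fill_gaps(raw))
print(clean)
```

The 95.0 spike disappears from the cleaned series while the slow upward trend is preserved, which is the behavior a model needs in order to learn from the signal rather than the noise.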

Balancing where data is processed matters too. Edge computing—processing data near where it’s collected—reduces delays and keeps AI responsive. A robotic system might use edge processing for obstacle detection but send broader insights to the cloud for longer-term learning. This mix of local and remote intelligence keeps operations both quick and informed.
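The split between local and remote processing can be pictured with a small Python sketch. The `EdgeNode` class, the 0.5 m stop distance, and the summary fields are assumptions for illustration; the upload itself is left out, since it depends on the platform in use.

```python
class EdgeNode:
    """Handles latency-sensitive checks locally and batches a summary for upload."""

    def __init__(self, stop_distance_m: float = 0.5):
        self.stop_distance_m = stop_distance_m
        self._distances = []

    def on_reading(self, distance_m: float) -> str:
        """Decide immediately, on the device, whether to stop."""
        self._distances.append(distance_m)
        return "stop" if distance_m < self.stop_distance_m else "continue"

    def flush_summary(self) -> dict:
        """Build a compact summary for the cloud and reset the local buffer."""
        d = self._distances
        summary = {
            "count": len(d),
            "min_m": min(d) if d else None,
            "near_misses": sum(1 for x in d if x < self.stop_distance_m),
        }
        self._distances = []
        return summary


node = EdgeNode()
actions = [node.on_reading(x) for x in [2.0, 0.4, 1.0]]
summary = node.flush_summary()
print(actions, summary)
```

The stop decision never waits on a network round trip, while the cloud still receives enough aggregate information to support longer-term learning.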

Implementation turns planning into practice. It joins sensors, algorithms, and human workflows into one system that functions smoothly in the real world. The process includes setting up the hardware, linking it with software, and testing how the system interacts with people and the space.

Hardware deployment involves installing devices such as cameras, sensors, and communication modules. Placement is crucial; one poorly positioned sensor can create blind spots or false readings. The physical setup should match the AI’s intended perspective—seeing and sensing what matters most.

Once the hardware is in place, software integration connects every component into a functioning whole. Middleware platforms often help bridge differences between devices, ensuring data moves consistently across systems. After integration, trial runs help measure how the AI behaves under normal conditions. A robotic arm, for example, may begin by performing simple motions before handling precision tasks.
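One job middleware often takes on is translating each device's output into a shared format. The Python sketch below shows the idea with two invented thermometer types; the device names, payload keys, and units are hypothetical and do not describe any real product.

```python
def normalize(device_type: str, payload: dict) -> dict:
    """Convert a vendor-specific payload into one shared schema."""
    if device_type == "vendor_a_thermo":
        # Hypothetical device that reports Fahrenheit under the key "tempF".
        return {"sensor_id": payload["id"], "metric": "temperature_c",
                "value": round((payload["tempF"] - 32) * 5 / 9, 2)}
    if device_type == "vendor_b_thermo":
        # Hypothetical device that reports tenths of a degree Celsius.
        return {"sensor_id": payload["serial"], "metric": "temperature_c",
                "value": payload["t_decicelsius"] / 10}
    raise ValueError(f"unknown device type: {device_type}")


a = normalize("vendor_a_thermo", {"id": "a1", "tempF": 72.5})
b = normalize("vendor_b_thermo", {"serial": "b7", "t_decicelsius": 225})
print(a, b)
```

Once every reading arrives in the same shape and unit, the AI model no longer has to know which vendor produced it.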

Human involvement remains central. AI should complement human effort, not replace it. Training staff to understand the system, interpret alerts, and intervene when needed keeps operations balanced and safe. AI integration succeeds only when humans trust and understand the technology they’re working with.

Safety and oversight should be built in from the start. Fail-safes, such as emergency stops, monitoring dashboards, and alert systems, reduce the risk of malfunction. Testing under different conditions—lighting, movement, or temperature changes—helps ensure the AI performs reliably before full deployment.
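A software fail-safe can be as simple as a watchdog that halts the machine when something looks wrong. The Python sketch below is a minimal version under stated assumptions: the `Watchdog` class, the timeout, and the speed limit are illustrative, and timestamps are passed in explicitly so the logic can be tested. A software check like this would normally sit alongside a hardware emergency stop rather than replace it.

```python
class Watchdog:
    """Trips a latched stop if heartbeats go missing or a speed limit is exceeded."""

    def __init__(self, timeout_s: float, max_speed: float):
        self.timeout_s = timeout_s
        self.max_speed = max_speed
        self.last_heartbeat = None
        self.stopped = False

    def heartbeat(self, now: float, speed: float) -> None:
        """Record that the controller is alive and check its reported speed."""
        self.last_heartbeat = now
        if speed > self.max_speed:
            self.stopped = True   # latch: stays stopped until reset()

    def check(self, now: float) -> bool:
        """Return True if the machine may keep running."""
        if self.last_heartbeat is None or now - self.last_heartbeat > self.timeout_s:
            self.stopped = True
        return not self.stopped

    def reset(self) -> None:
        """Clear the stop; intended to be called only after a human has inspected."""
        self.stopped = False


wd = Watchdog(timeout_s=1.0, max_speed=2.0)
wd.heartbeat(now=0.0, speed=1.0)
print(wd.check(now=0.5))   # heartbeat is recent
print(wd.check(now=2.0))   # heartbeat is stale, so the stop latches
```

The stop is latched on purpose: a late heartbeat does not silently resume motion, which keeps the decision to restart in human hands.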

Even after deployment, integration is an ongoing process. Physical environments shift over time—machines wear down, layouts change, and data patterns evolve. Continuous monitoring ensures that the AI system adapts instead of becoming outdated.

Routine performance checks help identify when predictions start drifting from reality. If an AI system in a smart warehouse begins misreading space usage, retraining it with updated data restores accuracy. Periodic evaluation and retraining keep the system aligned with actual conditions.
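One simple way to spot drift is to track the recent gap between predictions and observed values. The Python sketch below uses a rolling mean absolute error; the `DriftMonitor` class, window size, and threshold are assumptions, and the occupancy percentages are invented for the example.

```python
from collections import deque


class DriftMonitor:
    """Flags retraining when recent prediction error stays above a threshold."""

    def __init__(self, window: int, threshold: float):
        self.errors = deque(maxlen=window)   # keeps only the latest errors
        self.threshold = threshold

    def record(self, predicted: float, actual: float) -> None:
        self.errors.append(abs(predicted - actual))

    def needs_retraining(self) -> bool:
        """True once the window is full and mean absolute error exceeds the threshold."""
        if len(self.errors) < self.errors.maxlen:
            return False
        return sum(self.errors) / len(self.errors) > self.threshold


monitor = DriftMonitor(window=3, threshold=5.0)
for predicted, actual in [(50, 51), (50, 52), (50, 49)]:
    monitor.record(predicted, actual)
before = monitor.needs_retraining()

for predicted, actual in [(50, 70), (50, 65), (50, 68)]:   # layout has changed
    monitor.record(predicted, actual)
after = monitor.needs_retraining()
print(before, after)
```

In practice the threshold would be set from the error level observed during the pilot, so that ordinary noise does not trigger retraining.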

The physical hardware supporting AI must be maintained as well. Sensors may need recalibration, cameras cleaned, or firmware updated to maintain precision. These small adjustments preserve the quality of data flowing into the AI model.
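Recalibration often comes down to comparing a sensor against trusted references and correcting the difference. The Python sketch below shows a two-point linear correction, which is a common approach but assumes the sensor's error is linear; the reference values are made up.

```python
def two_point_calibration(raw_low, ref_low, raw_high, ref_high):
    """Return a function that maps raw readings onto the reference scale."""
    if raw_high == raw_low:
        raise ValueError("calibration points must differ")
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    return lambda raw: gain * raw + offset


# The sensor reads 2.0 at a true 0.0 and 98.0 at a true 100.0.
correct = two_point_calibration(raw_low=2.0, ref_low=0.0,
                                raw_high=98.0, ref_high=100.0)
print(correct(50.0))
```

Applying the correction before data reaches the model means the model does not have to be retrained every time a sensor ages slightly.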

Cybersecurity must never be overlooked. More connected devices mean greater exposure to digital risks. Encrypted communication, regular software updates, and restricted access protect the system and prevent unauthorized control or data misuse.
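As one small piece of this, a device can refuse commands that were not signed with a shared secret. The Python sketch below uses the standard library's `hmac` module for that check. It covers message authenticity only, not encryption, which would typically be handled by a transport such as TLS; the key and command strings are placeholders.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> str:
    """Produce a SHA-256 HMAC tag for a command."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, message: bytes, signature: str) -> bool:
    """Accept a command only if its tag matches."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sign(key, message), signature)


key = b"demo-key-do-not-use"          # placeholder; real keys belong in a secret store
tag = sign(key, b"open_valve")
print(verify(key, b"open_valve", tag))    # genuine command
print(verify(key, b"close_valve", tag))   # altered command is rejected
```

A tampered command or a sender without the key fails verification, so the device can ignore it instead of acting on it.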

Once stable, the system can be expanded. A pilot setup can grow from a single room to an entire facility. Scaling should follow proven results, not assumptions. Gradual expansion allows improvements based on experience rather than guesswork. The result is an intelligent system that grows stronger as it learns from the world around it.

Integrating AI in a physical environment brings intelligence into daily operations in a tangible way. It starts with understanding the space, continues through solid data design and careful setup, and matures through ongoing monitoring. The process demands collaboration between people and technology rather than separation. When done thoughtfully, AI doesn’t dominate the environment—it blends with it, responding naturally to human needs and real-world changes. The outcome is a space that thinks, adapts, and improves alongside the people who depend on it.

Advertisement

Explore the role of a Director of Machine Learning in the financial sector. Learn how machine learning is transforming risk, compliance, and decision-making in finance

Discover 26 interesting ways to use ChatGPT in daily life—from learning new skills and writing better content to planning trips and improving productivity. This guide shows how this AI tool helps simplify tasks, boost creativity, and make your workday easier

Explore how multimodal GenAI is reshaping industries by boosting creativity, speed, and smarter human-machine interaction

Microsoft’s in-house Maia 100 and Cobalt CPU mark a strategic shift in AI and cloud infrastructure. Learn how these custom chips power Azure services with better performance and control

If you want to assure long-term stability and need a cost-effective solution, then think of building your own GenAI applications

How the Vertex AI Model Garden supports thousands of open-source models, enabling teams to deploy, fine-tune, and scale open LLMs for real-world use with reliable infrastructure and easy integration

How the semi-humanoid AI service robot is reshaping commercial businesses by improving efficiency, enhancing customer experience, and supporting staff with seamless commercial automation

Learn the top eight impacts of global privacy laws on small businesses and what they mean for your data security in 2025.

How the ORDER BY clause in SQL helps organize query results by sorting data using columns, expressions, and aliases. Improve your SQL sorting techniques with this practical guide

Explore how generative AI transforms content, design, healthcare, and code development with practical tools and use cases

How Orca LLM challenges the traditional scale-based AI model approach by using explanation tuning to improve reasoning, accuracy, and transparency in responses

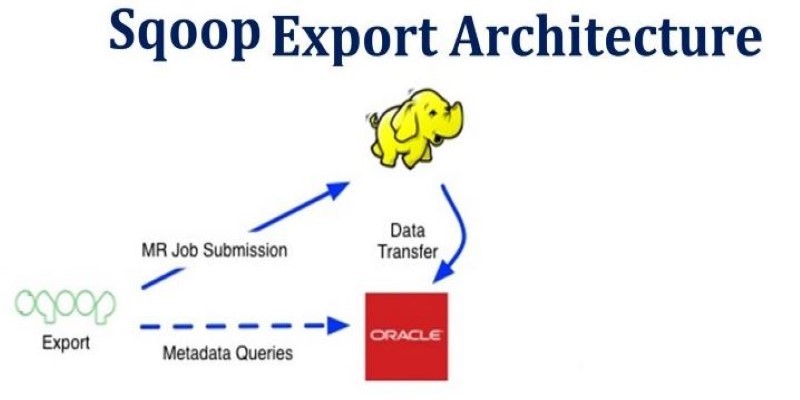

How Apache Sqoop simplifies large-scale data transfer between relational databases and Hadoop. This comprehensive guide explains its features, workflow, use cases, and limitations